New Research Helps Robots Grasp Situational Context

August 14, 2025

|

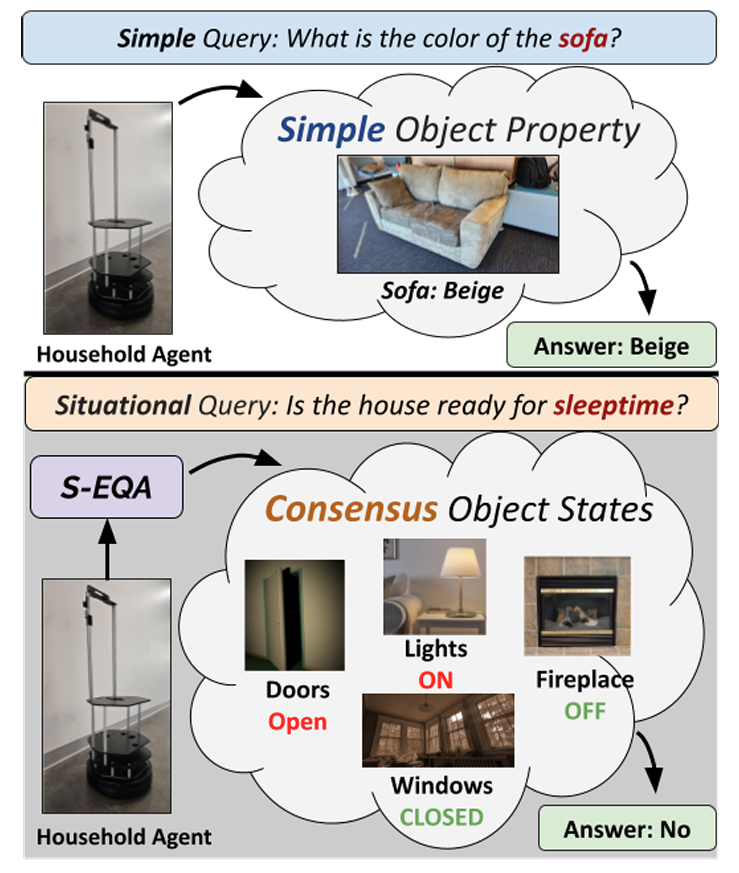

| In S-EQA, queries require inspecting multiple objects and determining their states to form a consensus answer. Using an LLM, the team generated S-EQA data in VirtualHome with verified object states and relationships. Evaluation of LLMs on S-EQA showed strong performance in generating queries but weaker alignment in answering them, indicating limits in commonsense reasoning for this task. |

The work began during an internship in Summer 2023 when UMD Ph.D. student Vishnu Dorbala worked with Amazon’s Artificial General Intelligence (AGI) group. Over the past two years at the University of Maryland, Dorbala has significantly extended and refined the project in collaboration with ISR Professor and Executive Director of Innovations in AI Reza Ghanadan and Distinguished University Professor Dinesh Manocha (ISR/CS/ECE). This sustained effort resulted in major advances in algorithm design and dataset development for Embodied AI.

The research addresses a cutting-edge challenge in Embodied AI: how to design intelligent agents that not only respond to simple commands but can also reason about complex, real-world situations in human environments.

"Mobile robots are expected to make life at home easier. This includes answering questions about everyday situations like 'Is the house ready for sleeptime?',” said Dorbala. “Doing so requires understanding the states of many things at once, like the doors being closed, the fireplace being off, and so on. Our work provides a novel solution for this problem using Large Language Models (LLMs), paving the way towards making household robots smarter and more useful!"

Using an LLM, the team generated S-EQA data in VirtualHome with verified object states and relationships. A large-scale Amazon Mechanical Turk study confirmed data authenticity. Evaluation of LLMs on S-EQA showed strong performance in generating queries but weaker alignment in answering them, indicating limits in commonsense reasoning for this task.

Teaching Robots to “Get It”

In the field of Embodied Question Answering (EQA), robots are trained to navigate their surroundings and answer questions based on their visual observations. However, until now, most EQA systems have been limited to responding to simple, object-specific prompts, such as identifying the color of a couch or locating a knife on the counter.

The ISR-led team is shifting that paradigm.

Instead of asking, “Where is the light switch?” their system tackles far more complex queries, like “I'm traveling out of town for a few days, is the house all set?” This type of question requires understanding multiple elements within a space and how they relate to one another. For example, are the lights off? Are the doors closed? Are the windows locked? Is the thermostat set appropriately for an empty house? Is there enough food for my cat in the food dispenser? Are the laundry machines empty and off? These are what the team calls situational queries, and answering them requires a level of consensus-based reasoning that today’s AI systems typically lack.
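To make the idea of a consensus answer concrete, here is a minimal Python sketch that checks one situational query against several object states at once. The object names, the required states, and the `answer_situational_query` helper are hypothetical illustrations chosen for this example; they are not taken from the S-EQA code or dataset.

```python
from typing import Dict, List, Tuple

# Hypothetical consensus requirements for one situational query:
# every object must be in the listed state for the answer to be "yes".
REQUIRED_STATES: Dict[str, str] = {
    "lights": "off",
    "front_door": "closed",
    "windows": "locked",
}


def answer_situational_query(
    required: Dict[str, str], observed: Dict[str, str]
) -> Tuple[bool, List[str]]:
    """Return (answer, violations) for a query that needs all required states."""
    violations = [
        f"{obj} is {observed.get(obj, 'unknown')}, expected {state}"
        for obj, state in required.items()
        if observed.get(obj) != state
    ]
    # The answer is "yes" only when no required state is violated.
    return (not violations, violations)


observed_states = {"lights": "off", "front_door": "closed", "windows": "open"}
ready, problems = answer_situational_query(REQUIRED_STATES, observed_states)
print(ready, problems)
```

A single object in the wrong state is enough to flip the answer, which is what separates a situational query from a simple, single-object question.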

|

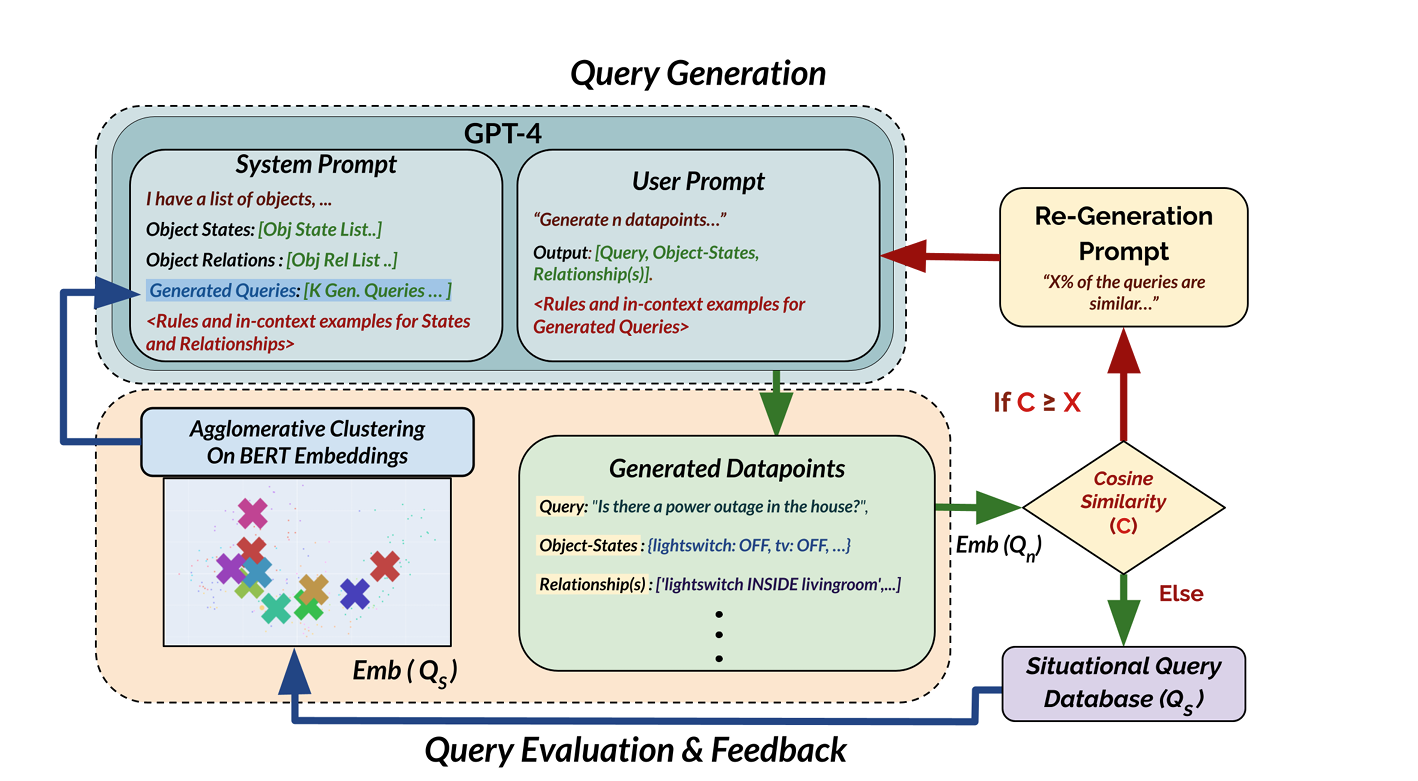

| The team used GPT-4 to generate situational datapoints consisting of queries, consensus object states, and relationships. Data generation occurred over multiple iterations, with prompts refined after evaluating each batch. BERT embeddings were used to compare new queries with those in the existing Situational Query Database, ensuring novelty. Clustering methods identified representative queries for feedback. The process highlights the iterative pathway for producing high-quality, diverse situational questions. |
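The novelty check described in the caption can be sketched roughly as follows. This is an illustration only: the `embed` function is a toy bag-of-words stand-in for a BERT embedding, and the `is_novel` helper and its `0.8` threshold are assumptions made for the example, not values reported by the team.

```python
import math
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a stand-in for a BERT sentence embedding."""
    return Counter(text.lower().replace("?", "").split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def is_novel(query: str, database: List[str], threshold: float = 0.8) -> bool:
    """A query is novel if it is not too similar to any stored query."""
    q = embed(query)
    return all(cosine(q, embed(old)) < threshold for old in database)


database = ["Is the house ready for sleeptime?"]
print(is_novel("Is the house ready for sleeptime?", database))
print(is_novel("Are the laundry machines empty?", database))
```

With a real embedding model, only `embed` would change; the similarity comparison against the existing database stays the same.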

A Novel Algorithm and a First-of-Its-Kind Dataset

To address this challenge, the researchers developed a new algorithmic framework called the Prompt-Generate-Evaluate (PGE) scheme. This approach leverages an LLM to create rich, situational questions and generate the underlying object-state information required to answer them, followed by an evaluation phase to ensure contextual accuracy. This combination of generation and structured reasoning represents a new way of operationalizing LLM outputs for embodied agents, enabling them to handle complex, multi-object reasoning tasks.
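As a rough illustration of how a prompt, generate, and evaluate cycle might be wired together, consider the Python sketch below. It is not the team's implementation: `stub_generator` stands in for an LLM call and returns canned datapoints, the `evaluate` rule (require object states, reject repeated queries) is a simplified assumption, and the prompt text is invented for the example.

```python
from typing import Any, Callable, Dict, List

Datapoint = Dict[str, Any]


def stub_generator(prompt: str, batch: int) -> List[Datapoint]:
    """Stand-in for an LLM call (an assumption); returns canned datapoints."""
    pool: List[Datapoint] = [
        {"query": "Is the house ready for sleeptime?",
         "states": {"doors": "closed", "fireplace": "off"}},
        {"query": "Is the house all set for a trip?",
         "states": {"lights": "off", "windows": "locked"}},
        {"query": "Is the kitchen safe?", "states": {}},  # no object states
    ]
    start = batch * 2
    return pool[start:start + 2]


def evaluate(point: Datapoint, accepted: List[Datapoint]) -> bool:
    """Keep a datapoint only if it has object states and a new query."""
    seen = {p["query"] for p in accepted}
    return bool(point["states"]) and point["query"] not in seen


def prompt_generate_evaluate(
    generate: Callable[[str, int], List[Datapoint]], rounds: int
) -> List[Datapoint]:
    prompt = "Generate situational household queries with object states."
    accepted: List[Datapoint] = []
    for batch in range(rounds):
        rejected = 0
        for candidate in generate(prompt, batch):  # Generate
            if evaluate(candidate, accepted):      # Evaluate
                accepted.append(candidate)
            else:
                rejected += 1
        if rejected:
            # Refine the prompt for the next batch based on what failed.
            prompt += " Always include at least one object state."
    return accepted


dataset = prompt_generate_evaluate(stub_generator, rounds=2)
print([p["query"] for p in dataset])
```

The point of the loop is that evaluation feeds back into the next prompt, so later batches are shaped by what earlier batches got wrong.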

They employed this technique to create a novel dataset of 2,000 situational queries within a simulated household environment known as VirtualHome. To ensure the AI-generated questions reflected real human expectations, the team ran a large-scale validation study using Amazon Mechanical Turk. The result? A remarkable 97.26% of the AI-generated queries were deemed answerable by human consensus.

The Gap Between Understanding and Execution

The team also evaluated how well language models could answer the situational queries they had created, and the results revealed a striking gap. While the LLMs were highly effective at generating realistic situational questions, their answers aligned with human responses only 46% of the time.
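One simple way to quantify this kind of alignment is to compare each model answer with the majority human answer for the same query. The Python sketch below shows that calculation under stated assumptions: the article does not describe the exact metric the team used, the `alignment_rate` helper is hypothetical, and the votes are invented toy values, not S-EQA data.

```python
from collections import Counter
from typing import Dict, List


def majority_answer(votes: List[str]) -> str:
    """Return the most frequent human answer for one query."""
    return Counter(votes).most_common(1)[0][0]


def alignment_rate(
    human_votes: Dict[str, List[str]], model_answers: Dict[str, str]
) -> float:
    """Fraction of queries where the model matches the human majority."""
    matches = sum(
        model_answers.get(query) == majority_answer(votes)
        for query, votes in human_votes.items()
    )
    return matches / len(human_votes)


# Invented toy votes, for illustration only (not S-EQA data).
human_votes = {
    "q1": ["yes", "yes", "no"],
    "q2": ["no", "no", "no"],
    "q3": ["yes", "no", "yes"],
    "q4": ["no", "yes", "no"],
}
model_answers = {"q1": "yes", "q2": "yes", "q3": "no", "q4": "no"}
rate = alignment_rate(human_votes, model_answers)
print(f"alignment: {rate:.0%}")
```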

LLMs can mimic human reasoning in generating complex scenarios, but they still struggle to make accurate judgments without structured scene information. By exposing these gaps, the research lays the groundwork for future advances in AI systems that combine perception, reasoning, and action. These findings could help bring truly intelligent home assistants and service robots closer to reality.

Looking Ahead

This work is the first to propose both the concept of situational queries and a generative approach to creating them for embodied agents.

"As AI systems progress toward AGI and greater autonomy, the ability to reason over complex, multi-object environments becomes essential. Our collaborative work between the University of Maryland and the Amazon AGI team on situational Embodied Question Answering (S-EQA) showcases how distributed agents can transcend reactive behaviors to deliver consensus-driven, context-aware decisions,” said Ghanadan. “This marks a pivotal advancement in agentic AI—enabling systems that are not only intelligent but also robust, reliable, and ready for deployment in practical, real-world scenarios where nuanced understanding and adaptability are critical."

The work sets a new benchmark in embodied AI, opening the door to real-world applications where robots must make informed, context-aware decisions.